Douglass North on the role of institutions in our society, part 2. "Understanding the Process of Economic Change". Also, "Violence and Social Orders". The American occupations of Germany, Japan, Afghanistan, and Iraq are case studies of institutions at work.

In part 1, I discussed the role of ideology and thought patterns in institutional economics, which is the topic of North's book. This post looks at the implications for development economics. In this modern age, especially with the internet, information has never been more freely available. All countries have access to advanced technological information as well as the vast corpus of economics literature on how to harness it for economic development and the good of their societies. Yet everywhere we look, developing economies are in chains. What is the problem? Another way to put it is that we have always had competition among relatively free and intelligent people, but have not always had civilization, and have had the modern civilization we know today, characterized by democracy and relatively free economic diversity, for only a couple of centuries, and only in a minority of countries. This is not the normal state of affairs, despite being a very good state of affairs.

The problem is clearly not knowledge per se, but its diffusion (human capital) and, far more critically, the social institutions that put it to work. The social sciences, including economics, are evidently still in their infancy when it comes to understanding the deep structure of societies and how to make them work better. North poses the basic problem of the transition from primitive ("natural") economies, which are personal and small-scale, to the advanced economies that grew first in the West after the Renaissance, which are characterized by impersonal, rule-based exchange and a flourishing of independent organizations. Humans naturally operate on the first level, and it takes the production of a "new man" to suit him or her to the impersonal system of modern political economies.

This model of human takes refuge in the state as the guarantor of property, contracts, money, security, law, political fairness, and many other institutions foundational to the security and prosperity of life as we know it. This model of human is comfortable interacting with complete strangers for all sorts of transactions, from mundane products bought through the price system to complex and personal products like loans and health care handled through other institutions, all regulated by norms of behavior as well as by the state, where needed. This model of human develops intense specialization after a long education in very narrow productive skills, in order to live in a society with an astonishing diversity of work. There is an organized and rule-based competition to develop such skills to the most detailed and extensive degree. This model of human relies on other social institutions, such as the legal system, consumer review services, and standards of practice in each field, to ensure that the vast asymmetry of information between her and the specialized sellers of the goods and services she needs is not used against her, in fraud and other breaches of implicit trust.

All this is rather unlike the original model, who took refuge in his or her clan, relying on the social and physical power of that group to access economic power. That is, one has to know someone to use land or get a job, to deal with other groups, to make successful trades, and for basic security. North characterizes this society as "limited access", since it is run by and for coalitions of the powerful, like the lords and nobility of medieval Europe or the warlords of Afghanistan today. For such non-modern states, the overwhelming problem is not economic efficiency, but avoiding disintegration and civil war. They are made up of elite coalitions that limit violence by allocating economic rewards according to political / military power. If this is done accurately on that basis, each lord gets a stable share and has little incentive to start a civil war, since his (or her) power is already reflected in his or her economic share, a war would necessarily shrink the whole economic pie, and it would additionally risk reducing the lord to nothing at all. This is a highly personalized and dynamic system, where the central state's job is mostly to make sure that each of the coalition members is getting their proper share, with changes reflecting power shifts through time.
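The arithmetic of this bargain can be made concrete with a toy model. This is a minimal sketch under my own simplifying assumptions, not North's formalism: a lord who starts a war wins the whole (war-damaged) pie with probability equal to his share of total power, and otherwise gets nothing. The function names and the power levels, pie size, and destruction rate are hypothetical, chosen only for illustration.

```python
def war_payoff(power: list[float], i: int, pie: float, destruction: float) -> float:
    """Expected payoff to lord i from starting a war (assumed winner-take-all).

    Lord i wins with probability power[i] / sum(power) and then takes the
    whole pie, which fighting has shrunk by the fraction `destruction`.
    A loser gets nothing.
    """
    win_prob = power[i] / sum(power)
    return win_prob * pie * (1 - destruction)


def prefers_war(shares: list[float], power: list[float],
                pie: float, destruction: float) -> list[bool]:
    """For each lord, True if his expected war payoff beats his peacetime share."""
    return [war_payoff(power, i, pie, destruction) > shares[i]
            for i in range(len(power))]


power = [5.0, 3.0, 2.0]   # hypothetical military strength of three lords
pie = 100.0               # hypothetical size of the economic pie in peacetime
destruction = 0.3         # hypothetical fraction of the pie destroyed by war

# Shares allocated in proportion to power: 50, 30, 20.
proportional = [p / sum(power) * pie for p in power]
print(prefers_war(proportional, power, pie, destruction))  # [False, False, False]

# The center over-rewards the strongest lord at the others' expense.
skewed = [70.0, 20.0, 10.0]
print(prefers_war(skewed, power, pie, destruction))  # [False, True, True]
```

With shares proportional to power, no lord gains by fighting, because war only shrinks the pie. When the center misallocates, the two weaker lords each expect more from war (21 and 14) than from peace (20 and 10), which is the instability the coalition has to keep correcting as power shifts.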

Locations of Norman castles in Britain. The powers distributed through the country formed a coalition that required constant maintenance and care from the center to keep privileges and benefits balanced and shared out according to the power of each local lord.

For example, the Norman conquest of England installed a new set of landlords, who cared nothing for the English peasants, but carried on an elite society full of jealousies and warfare amongst themselves to grab more of the wealth of the country. Most of the time, however, there was a stable balance of power, thus of land allotments, and thus of economic shares, making for a reasonably peaceful realm. All power flowed through the state (the land allotments were all ultimately granted by the king, and in the early days were routinely taken away again if the king was displeased with the lord's loyalty or status), which is to say through this coalition of nobles, and they had little thought for economic efficiency, innovation, legal niceties, or perpetual non-political institutions to support trade, scholarship, and innovation. (The exception was the church, which was an intimate partner of the state.)

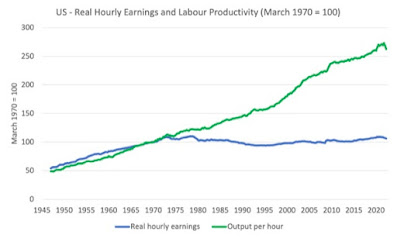

Notice that in the US and other modern political systems, the political system is almost slavishly devoted to "the economy", whereas in non-modern societies, the economy is a slave to the political system, which cavalierly assigns shares to the powerful and nothing to anyone else, infeudating them to the lords of the coalition. The economy is assumed to be static in its productivity and role, and thus simply a source of plunder and social power, rather than a subject of nurture and growth. And the state is composed of the elite, whose political power translates immediately into shares of a static economic pie. No notion of democracy here!

This all comes to mind when considering the rather disparate fates of the US military occupations of the last century, in which we came directly up against societies that we briefly controlled and tried to steer in economically as well as socially positive directions. The occupations of Germany, Japan, Afghanistan, and Iraq came to dramatically different ends, principally due to the differing levels of ingrained beliefs and institutional development of each culture (one could add the quasi-occupation of Vietnam here as well). While Germany and Japan were each devastated by World War 2, and took decades to recover, their people had long been educated into an advanced institutional framework of economic and civic activity. Some of the devastation was indeed political and social, since the Nazis (as well as the imperial Japanese system) had set up an almost medieval (i.e., fascist) system of economic control, putting the state in charge of directing production in a cabal with leading industrialists. Yet despite all that, the elements were still in place for both nations to put their economies back together and in short order rejoin the fully developed world, in political and economic terms. How much of that was due to the individual human capital of each nation (i.e., education in both technical and civic aspects), how much to the residual organizational and institutional structures, such as impersonal legal and trade expectations, and how much to the instructive activities of the occupying administration?

One would have to conclude that very little was due to the latter, for try as we might in Iraq and Afghanistan, their cultures were not ready for full-blown modernity (elections, democracy, capitalism, rule of law, etc.) in the political-economic sense. Many of their people were ready, and the models abroad were and remain ready for application. Vast amounts of information and good will are at their disposal to build a modern state. But, alas, their real power structures were not receptive. Indeed, in Afghanistan, each warlord continued to maintain his own army, and civil war was a constant danger, until today, when a civil war is in full swing, conducted by the Taliban against a withering central state. The Taliban has historically been the only group with the widespread cultural support (at least in rural areas) and the ruthlessness to bring order to (most of) Afghanistan. Its coalition with the other elites is based partly on doctrinaire Islam (which all parties across the spectrum pay lip service to) and partly on brutal but effective authoritarianism. When the US invaded, we took advantage of the few groups outside the existing power coalition (in the north), arming them to defeat the Taliban. That was an instance of working with the existing power structures.

But replacing or reforming them was an entirely different project. The fact is that the development of modern economies took Western countries centuries, and takes even the most avid students (Taiwan, South Korea, and to a partial degree China) several decades of work to retrace. North emphasizes that development from primitive to modern political-economic systems is not a given, and progress is as likely to go backward as forward, depending at each moment on the incentives of those in power. To progress, they need to see more benefit in stability and durable institutions than in their own freedom of action to threaten the other members of the coalition, keep armies, extort economic rents, etc. Only as chaos recedes, stability starts being taken for granted, and the cost of keeping armies exceeds their utility, does the calculus gradually shift. That process is fundamentally psychological: it reflects the observations and beliefs of the actors, and takes a long time, especially in a country such as Afghanistan with such a durable tradition of militarized independence and plunder.

So what should we have done, instead of dreaming that we could build, out of the existing culture and distribution of power, a women-friendly capitalist modern democracy in Afghanistan? First, we should have seen clearly at the outset that we had only two choices. The first was to take over the culture root and branch, with a million soldiers. The other was to work within the culture on a practical program of reform, whose goal would have been to take it a few steps down the road from a "fragile" limited access state, where civil war is a constant threat, to a "basic" limited access state, where the elites are starting to accept some rules and the state is stable, but still exists mostly to share out the economic pie to current power holders. Indeed, the "basic" state is the only substantial social organization: all other organizations have to be created by it or affiliated with it, because any privilege worth having is jealously guarded by the state, in very personal terms.

Incidentally, the next step in North's taxonomy of states would be the mature limited access order, where laws begin to be made in a non-personal way and non-state organizations, like commercial guilds, are allowed to exist more broadly, but complete equality before the law and free access to standardized organization types have not yet been achieved. The latter would be an "open access order", which modern states occupy. There, the military is entirely under the democratic and lawful control of a central state, and the power centers that are left in the society have become more diffuse, and are all willing to compete within an open, egalitarian legal framework in economic as well as political matters. It was this overall bargain that was being tested by the last administration's flirtation with an armed coup at the Capitol earlier this year.

In the case of Afghanistan, there is a wild card in the form of the Taliban, which is not really a localized warlord kind of power, which can be fairly dealt out a share of the local and national economic pie. They are an amalgam of local powers from many parts of the country, plus an ideological movement, plus a pawn of Pakistan, the Gulf states, and the many other funders of fundamentalist Islam. Whatever they are, they are a power the central government has to reckon with, both via recognition and acceptance, as well as competition and strategies to blunt their power.

Above all, peace and security have always been the main goal. It is peace that moderates the need for every warlord to maintain his own army, that nudges all the actors toward a more rule-based, regular way to harvest economic rents from the rest of the economy, and that helps that economy grow. The lack of security is also the biggest calling card for the Taliban, an organization that terrorizes the countryside and foments insecurity as its principal policy (an odd theology, one might think!). How did we do on that front? Well, not very well at all. In the first place, the presence of the US and its allies was an irritant. Second, our profusion of reform policies, from poppy eradication, to women's education, to showpiece elections, to relentless and often aimless bombing, took our eyes off the ball and generated ill will virtually across the spectrum. One gets the sense that Hamid Karzai was trying very hard to keep it all together in the classic pattern of a fragile state, by dealing out favors to each of the big powers across the country in a reasonably effective way, and calling out the US occasionally for its excesses. But from a modern perspective, that all looks like hopeless corruption, and we installed the next government under Ashraf Ghani, which tried to step up modernist reforms without the necessary conditions of even having progressed from a fragile to a basic state, let alone to a mature state or any hint of the "doorstep conditions" of modernity that North emphasizes. This is not even to mention that we seem to have set up the central state's military on an unsustainable basis, dependent on modern (foreign) hardware, expertise, and funding that were always destined to dry up eventually.

So, nation-building? Yes, absolutely. But smarter nation-building that doesn't ask too much of the society being put through the wringer. Nation-building happens in gradual steps, not all at once, not by fiat, and certainly not by imposition by outsiders (unless we have a couple of centuries to spare, as the Normans did). Our experience with the post-World War 2 reconstructions was deeply misleading if we came away with the idea that those countries did nothing but learn at America's knee and copy the American template, and were not themselves abundantly prepared for institutional and economic reconstruction.